In the future, computers will not crash due to bad software updates, even those updates that involve kernel code. In the future, these updates will push eBPF code.

Friday, July 19th, 2024 provided an unprecedented example of the inherent dangers of kernel programming, and has been called the largest outage in the history of information technology. Windows computers around the world encountered blue-screens-of-death and boot loops, causing outages for hospitals, airlines, banks, grocery stores, media broadcasters, and more. The cause was a config update that a security company shipped for its widely used product, which includes a kernel driver on Windows systems. The update caused the kernel driver to try to read invalid memory, a type of error that crashes the kernel.

For Linux systems, the company behind this outage was already in the process of adopting eBPF, which is immune to such crashes. Once Microsoft's eBPF support for Windows becomes production-ready, Windows security software can be ported to eBPF as well. These security agents will then be safe and unable to cause a Windows kernel crash.

eBPF (no longer an acronym) is a secure kernel execution environment, similar to the secure JavaScript runtime built into web browsers. If you're using Linux, you likely already have eBPF available on your systems whether you know it or not, as it was included in the kernel several years ago. eBPF programs cannot crash the entire system because they are safety-checked by a software verifier and are effectively run in a sandbox. If the verifier finds any unsafe code, the program is rejected and not executed. The verifier is rigorous -- the Linux implementation has over 20,000 lines of code -- with contributions from industry (e.g., Meta, Isovalent, Google) and academia (e.g., Rutgers University, University of Washington). The safety this provides is a key benefit of eBPF, along with heightened security and lower resource usage.
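To make the "verify first, then run" idea concrete, here is a toy sketch in Python. It is not the Linux verifier and not eBPF bytecode: the three-instruction language, the `Insn` type, `MAX_INSNS`, and the function names are all invented for illustration. The only point it tries to show is that an unsafe memory read is rejected at load time, before any instruction executes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Insn:
    """One instruction of a made-up mini language (hypothetical, not eBPF)."""
    op: str       # "load", "add", or "exit"
    arg: int = 0  # byte offset for "load", constant for "add"


class VerifierError(Exception):
    """Raised when a program fails the static safety check."""


MAX_INSNS = 64  # hypothetical size limit, chosen arbitrarily for this sketch


def verify(program, mem_size):
    """Check every instruction without executing anything."""
    if not program or len(program) > MAX_INSNS:
        raise VerifierError("program is empty or too long")
    if program[-1].op != "exit":
        raise VerifierError("program must end with exit")
    for pc, insn in enumerate(program):
        if insn.op == "load":
            # The key check: a read outside the memory region is refused.
            if not 0 <= insn.arg < mem_size:
                raise VerifierError(
                    f"insn {pc}: load at offset {insn.arg} is out of bounds"
                )
        elif insn.op not in ("add", "exit"):
            raise VerifierError(f"insn {pc}: unknown opcode {insn.op!r}")


def load_and_run(program, memory):
    """Run a program only if it passes verification; return the accumulator."""
    verify(program, len(memory))
    acc = 0
    for insn in program:
        if insn.op == "load":
            acc = memory[insn.arg]
        elif insn.op == "add":
            acc += insn.arg
        else:  # "exit"
            break
    return acc
```

With this sketch, `load_and_run([Insn("load", 2), Insn("add", 5), Insn("exit")], bytes([10, 20, 30, 40]))` returns 35, while a program that tries `Insn("load", 4096)` against a 4-byte region raises `VerifierError` and never runs. A real verifier does far more (tracking register state, pointer types, and control flow, for example), but the load-time rejection is the same shape.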

Some eBPF-based security startups (e.g., Oligo, Uptycs) have made their own statements about the recent outage, and the advantages of migrating to eBPF. Larger tech companies are also adopting eBPF for security. As an example, Cisco acquired the eBPF startup Isovalent and has announced a new eBPF security product: Cisco Hypershield, a fabric for security enforcement and monitoring. Google and Meta already rely on eBPF to detect and stop bad actors in their fleets, thanks to eBPF's speed, deep visibility, and safety guarantees. Beyond security, eBPF is also used for networking and observability.

The worst thing an eBPF program can do is to merely consume more resources than is desirable, such as CPU cycles and memory. eBPF cannot prevent developers from writing poor code -- wasteful code -- but it will prevent serious issues that cause a system to crash. That said, as a new technology eBPF has had some bugs in its management code, including a Linux kernel panic discovered by the same security company in the news today. This doesn't mean that eBPF has solved nothing and merely swapped a vendor's bug for its own. Fixing these bugs in eBPF means fixing these bugs for all eBPF vendors, and more quickly improving the security of everyone.

There are other ways to reduce risks during software deployment that can be employed as well: canary testing, staged rollouts, and "resilience engineering" in general. What's important about the eBPF method is that it is a software solution that will be available in both Linux and Windows kernels by default, and has already been adopted for this use case.
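As a rough illustration of canary testing and staged rollouts, the sketch below pushes an update to a growing fraction of hosts and stops at the first unhealthy batch. Everything here is an assumption made for the example: the function name, the `deploy` and `is_healthy` callbacks (which the caller would supply), and the default stage fractions of 1%, 10%, and 100%.

```python
def staged_rollout(hosts, deploy, is_healthy, stages=(0.01, 0.10, 1.0)):
    """Deploy to growing fractions of hosts, halting on the first bad batch.

    `deploy` and `is_healthy` are hypothetical callbacks taking one host.
    Returns (updated_hosts, completed).
    """
    updated = []
    for fraction in stages:
        # Cumulative number of hosts that should be updated after this stage.
        target = max(1, int(len(hosts) * fraction))
        batch = hosts[len(updated):target]
        for host in batch:
            deploy(host)
            updated.append(host)
        # Check the hosts just updated before widening the rollout.
        if not all(is_healthy(host) for host in batch):
            return updated, False
    return updated, True
```

With 200 hosts and the default stages, the first batch is 2 hosts. If those two fail their health check, the rollout stops there and the other 198 hosts are never touched. A production system would also need soak time between stages and a rollback path, which this sketch leaves out.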

If your company is paying for commercial software that includes kernel drivers or kernel modules, you can make eBPF a requirement. It's possible for Linux today, and Windows soon. While some vendors have already proactively adopted eBPF (thank you), others might need a little encouragement from their paying customers. Please help raise awareness, and together we can make such global outages a lesson of the past.

Authors: Brendan Gregg, Intel; Daniel Borkmann, Isovalent; Joe Stringer, Isovalent; KP Singh, Google.

This week we’re vacationing at the family cabin on an island; the nearest town is Gibsons. Mid-week, we hit town to pick up groceries and hardware. Unfortunately, it’s a really demanding walk from the waterfront to the mall, particularly with a load to carry, and there’s little public transit. Fortunately, there’s Coast Car Co-op, a competent and friendly little five-car outfit. We booked a couple of hours and the closest vehicle was a 2009 Ford Ranger, described as a “compact pickup” or “minitruck”. It made me think.

Think back fifteen years

I got in the Ranger and tried to adjust the seat, but that was as far back as it went. It didn’t go up or down. There were no cameras to help me back up. There was nowhere to plug my phone in. It had a gearshift on the steering column that moved a little red needle in a PRNDL tucked under the speedometer. There was no storage except for the truck bed. It wasn’t very fast. The radio was just a radio. It was smaller than almost anything on the road. I had to manipulate a physical “key” thing to make it go. I bet there were hardly any CPUs under the hood.

And, it was… perfectly OK.

The rear-view mirrors were big and showed me what I needed. It was dead easy to park; I could see all four of its corners. There was enough space in the back to carry all our stuff with plenty of room to spare. You wouldn’t want to drive fast in a small tourist town with lots of steep hills, blind corners, and distracted pedestrians. It wasn’t tracking my trips and selling the info. The seats were comfy enough.

Car companies: Dare to do less

I couldn’t possibly walk away from our time in the Ranger without thinking about the absolutely insane amounts of money and resources and carbon loading we could save by building smaller, simpler, cheaper, dumber automobiles.

Hovertext:

We should be measuring happiness of CATS.

Hovertext:

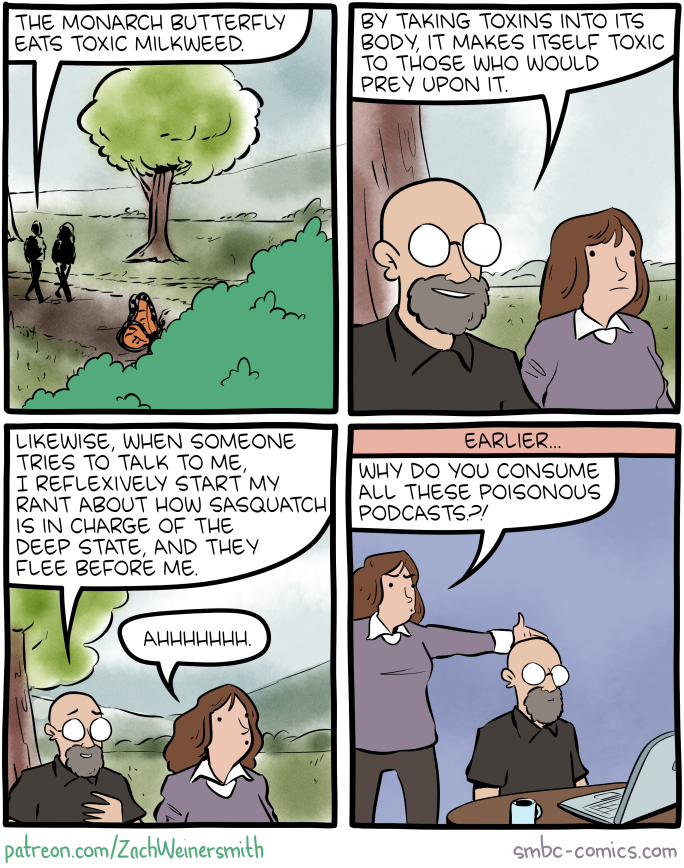

Remember when conspiracy theories were mostly just funny?
